Adapting AI Policies in Engineering Courses: Beyond One-Size-Fits-All – zyBooks Guide

By Dr. Nikitha Sambamurthy

Introduction

Engineering instructors face a pressing challenge: how do you write AI policies that actually support learning? Most policies are static, treating students working on their first problem the same as students who’ve built competence across the semester. Blanket bans prove unenforceable because students use AI anyway, just without guidance. Unrestricted use doesn’t teach critical thinking. One-size-fits-all approaches ignore that students need different levels of support as they develop skills.

The results are concerning. Instructors across disciplines report students who score near 100% on homework and then fail exams. That gap makes it hard to shift from integrity-focused policies (preventing cheating) to learning-focused policies (developing skills), because the evidence suggests many students are bypassing the learning process entirely.

But instructors also face pressure to teach students how to use AI effectively. Future employers expect graduates to work with AI tools. So the question isn’t whether students should use AI, but when and how. When does AI use support learning versus undermine it? At what point in skill development does AI shift from crutch to tool?

The answer lies in adaptive policies. Instead of treating all students and all assignments the same, policies should evolve as students progress from first exposure to mastery within a course. This approach protects foundational learning for novices while enabling advanced problem-solving for competent students.

We’ve Been Here Before

Forty-five years ago, a new technology sparked fierce debate in education:

- Critics argued students would lose the ability to think logically or solve problems independently

- Advocates claimed students could tackle complex, real-world problems instead of tedious computation

- National organizations pushed for universal access while teachers resisted based on individual student struggles

- The timing question persisted: Should students master basics by hand first, or learn with the tool from the start?

- Parents feared children’s dependency and loss of fundamental skills

The technology? Calculators.

We’ve had this conversation before. The difference is that AI can complete entire workflows, not just speed up computation. That demands more nuanced policies than the calculator’s “allowed with restrictions” approach ever required. But what does nuance actually look like in practice?

The Framework: Skill Progression Within a Course

Current AI policies fail because they ignore how learning actually works. Students don’t enter a course as blank slates or leave as experts. They progress through predictable stages of skill development, and AI policies should evolve with them.

Adapting the Dreyfus Model for Engineering Courses

The Dreyfus Model of Skill Acquisition (1980) describes how learners progress from novice to expert. For undergraduate engineering courses, we focus on four stages:

- Novice: First exposure, needs structure and clear instructions

- Advanced Beginner: Beginning pattern recognition, needs less guidance

- Competent: Can formulate routines and work independently

- Proficient: Increasingly relies on intuition, can teach others

Two important notes: First, we’re talking about skill progression within a single course, not across an entire degree program. A senior in mechanical engineering might still be a novice when learning a new analysis method. Second, we end at “proficient” because that’s typically as far as students progress in one semester. Professors operate at the expert level.

Four Types of Engineering Knowledge

Engineering courses don’t teach just one kind of knowledge. AI policies need to account for what students are actually doing:

1. Conceptual Understanding: Understanding principles, theories, and fundamental concepts that underlie engineering practice.

- Examples: Explaining why a bridge design distributes load effectively, describing the relationship between voltage and current, articulating why certain materials are chosen for specific applications

2. Procedural/Computational: Executing established methods, algorithms, or calculation procedures to solve well-defined problems.

- Examples: Solving systems of equations using Gaussian elimination, calculating beam deflection using standard formulas, performing nodal analysis on a circuit

3. Design/Synthesis: Creating novel solutions, systems, or approaches by integrating multiple concepts and making design decisions under constraints.

- Examples: Designing a circuit to meet specified performance requirements, creating a structural support system for a given load, developing an algorithm to solve a new problem

4. Analysis/Debugging: Evaluating existing designs, identifying problems, comparing alternatives, and making judgments about quality or effectiveness.

- Examples: Finding errors in code or circuit designs, evaluating why a design doesn’t meet specifications, troubleshooting a malfunctioning system

How Policies Adapt Across Both Dimensions

AI policies should vary based on both skill level and content type. A novice learning conceptual understanding needs different AI guidance than a competent student debugging code. The full matrix (included with this guide) maps specific policies across all combinations.

The general pattern:

Novice (First Exposure): No AI for most tasks. Students need to struggle with articulation and execution to build foundational mental models. AI would bypass the cognitive work needed to form these foundations.

Advanced Beginner (Developing Fluency): AI for comparison only. Students complete work independently, then compare with AI solutions to see what they missed. This builds pattern recognition without requiring judgment they haven’t developed yet.

Competent (Can Apply Independently): AI for verification and critique. Now students can evaluate AI feedback quality. They complete work independently, then critically assess AI suggestions, accepting useful corrections and rejecting errors. This develops metacognitive skills and healthy skepticism.

Proficient (Can Teach Others): AI as collaborative partner. Students have internalized fundamentals and can use AI throughout their work while maintaining responsibility for validation and decisions. Focus shifts to efficiency and advanced problem-solving.
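To make the general pattern concrete, it can be encoded as a simple lookup. The Python sketch below is a minimal illustration, not the full matrix: the policy strings paraphrase the four descriptions above, and the names `SkillLevel`, `ContentType`, `DEFAULT_POLICY`, and `ai_policy_for` are hypothetical. Because the matrix also varies policies by content type, the function accepts an optional table of (skill level, content type) overrides, which you would fill in from the matrix itself rather than from this example.

```python
from enum import Enum
from typing import Dict, Optional, Tuple


class SkillLevel(Enum):
    """Four within-course stages adapted from the Dreyfus model."""
    NOVICE = 1
    ADVANCED_BEGINNER = 2
    COMPETENT = 3
    PROFICIENT = 4


class ContentType(Enum):
    """The four types of engineering knowledge described in this guide."""
    CONCEPTUAL = "conceptual"
    PROCEDURAL = "procedural"
    DESIGN = "design"
    ANALYSIS = "analysis"


# General pattern only (paraphrased); the full matrix is not reproduced here.
DEFAULT_POLICY: Dict[SkillLevel, str] = {
    SkillLevel.NOVICE: "No AI for most tasks.",
    SkillLevel.ADVANCED_BEGINNER: "AI for comparison only, after independent work.",
    SkillLevel.COMPETENT: "AI for verification and critique, after independent work.",
    SkillLevel.PROFICIENT: "AI as a collaborative partner; student validates and decides.",
}

# Hypothetical per-cell refinements, to be filled in from the full matrix.
Overrides = Dict[Tuple[SkillLevel, ContentType], str]


def ai_policy_for(
    level: SkillLevel,
    content: ContentType,
    overrides: Optional[Overrides] = None,
) -> str:
    """Return the override for (level, content) if one exists, else the level default."""
    if overrides and (level, content) in overrides:
        return overrides[(level, content)]
    return DEFAULT_POLICY[level]


if __name__ == "__main__":
    # Print the default policy at each stage for one assignment type.
    for level in SkillLevel:
        print(f"{level.name}: {ai_policy_for(level, ContentType.PROCEDURAL)}")
```

Keeping the per-level defaults separate from the per-cell overrides mirrors the framework: skill level sets the general rule, and content type refines it wherever the matrix calls for something different.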

The Advanced Beginner Breakthrough

The distinction between Advanced Beginner and Competent is crucial and often overlooked. Advanced Beginners can identify that their solution differs from AI’s solution, but they cannot yet reliably evaluate which is correct. They can see differences but lack the judgment to assess quality.

Competent students, by contrast, can critically evaluate correctness and quality. They can determine when AI is right, when it’s wrong, and why.

This is why “AI for comparison” works at the Advanced Beginner stage while “AI for verification” works at Competent. Comparison asks students to notice what they included versus what they missed, which supports learning without requiring evaluation skills they haven’t yet developed. Verification asks students to judge quality and correctness, which only works once they’ve built that judgment.

Using the Framework

The complete matrix shows how these policies play out across all content types and skill levels. Use it to:

- Map your assignments by content type and skill level

- Identify where students need protection from AI (Novice)

- Determine when comparison is safe (Advanced Beginner)

- Know when critique becomes productive (Competent)

- Enable collaboration appropriately (Proficient)


Three Practical Implementation Strategies


The framework provides the theory. Here’s how to put it into practice. These three strategies address different needs: protecting foundational learning, building critical evaluation skills, and increasing student engagement.

1. Protecting Foundational Learning: Ensure At Least One Authentic Engagement

The problem: Students complete assignments by plugging problems into AI, copying answers, and submitting work without engaging with the material.

The solution: Use active learning to force at least one moment where students must engage without AI assistance.

Skill level: Novice (but valuable as a touchpoint throughout any course)

The fastest implementation: take your lecture materials and remove key information. Students come to class to fill in gaps and work through problems together.

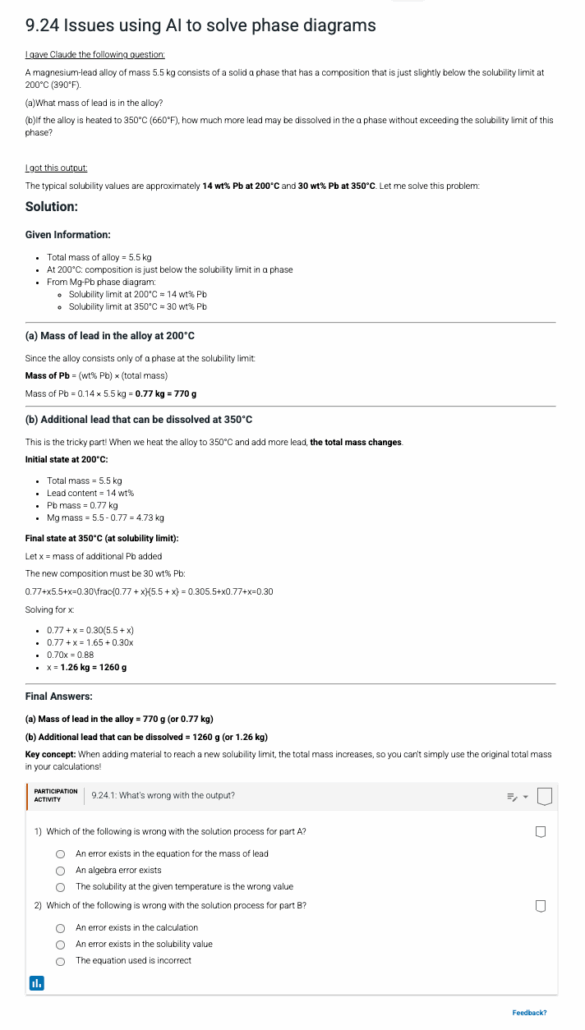

Example: Materials Science Phase Diagrams

In this example, definitions and the problem setup are provided, but the answer is removed. Students work through the solubility limit calculation on the board together, engaging with the problem-solving process without AI shortcuts.

Apply this principle to any technical course:

- Dynamics: Provide setup and equations; students work through free body diagrams in class

- Thermodynamics: Give context; students identify system boundaries and assumptions

- Circuits: Present diagrams; students determine analysis approach

This ensures students have at least one authentic engagement with the material where they build foundational mental models without AI bypassing the cognitive work.

2. Building Critical Evaluation Skills: Teach Error Detection

The problem: Students trust AI outputs uncritically and don’t understand what AI is good at versus where it makes mistakes.

The solution: Give students AI-generated solutions with errors and ask them to identify what’s wrong.

Skill level: Advanced Beginner → Competent (scaffold based on student skill)

This strategy develops the critical evaluation skills students need to eventually use AI as a partner. Students learn that AI makes plausible-sounding errors, misapplies concepts, and sometimes contradicts itself within responses.

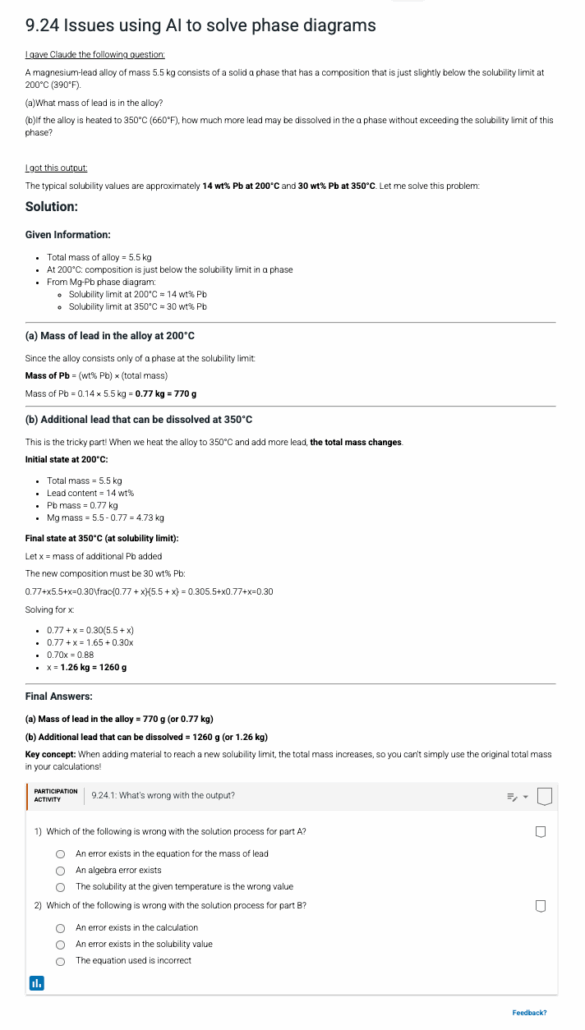

Example: Phase Diagram Problem with AI Errors

In this example, the AI chooses incorrect values because it cannot reliably read values from the phase diagram. Students must:

- Identify the contradiction

- Explain why it’s an error

- Determine the correct approach in the next section

How to scaffold by skill level:

Advanced Beginner: Point out that there ARE errors and guide students to find them. “This AI response has at least two problems. Can you identify them?” You’re helping them see that AI makes mistakes.

Competent: Give less scaffolding. “Review this AI solution and evaluate its quality.” Students must find errors independently and assess which parts are reliable.

Implementation:

You can deliver this through any platform. The example shown uses zyBooks custom content sections where the flawed AI output is presented as reading material, followed by multiple-choice questions that help students identify the specific errors. But you could equally:

- Display AI output in class and discuss as a group

- Assign as homework with written explanations required

- Build a library of common AI errors in your discipline

Start small:

- Create one error-detection problem per unit

- Build your library as you observe common AI mistakes in your discipline

- For advanced students, have them generate and critique their own AI solutions

This develops the competent-level skill of evaluating AI feedback quality while reinforcing subject mastery.

3. Increasing Engagement Across Levels: Let Students Customize Content

The problem: Multi-disciplinary courses use generic problems that don’t resonate with students’ varied engineering majors and interests.

The solution: Have students use AI to generate discipline-specific problems relevant to their backgrounds.

Skill level: Works across multiple levels; particularly valuable for motivation in foundational courses

How it works:

Provide students with a prompt template:

“I am a [engineering major] student taking [course]. Give me one homework-style problem using a real-world example in my major about [topic]. Make the problem engaging and relevant to current applications.”
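If students submit requests through a form or script, filling the template programmatically keeps every prompt consistent. The sketch below is a hypothetical Python helper (`build_prompt` is not part of any zyBooks tool). It reproduces the template’s wording and adds one small assumption: it picks “a” or “an” from the first letter of the major, a simple heuristic, so that “electrical engineering” reads correctly.

```python
PROMPT_TEMPLATE = (
    "I am {article} {major} student taking {course}. Give me one homework-style "
    "problem using a real-world example in my major about {topic}. "
    "Make the problem engaging and relevant to current applications."
)


def build_prompt(major: str, course: str, topic: str) -> str:
    """Fill the customization prompt template (hypothetical helper)."""
    fields = {"major": major.strip(), "course": course.strip(), "topic": topic.strip()}
    for name, value in fields.items():
        if not value:
            raise ValueError(f"{name} must not be empty")
    # Simple vowel-letter heuristic for "a" vs "an"; adequate for common major names.
    article = "an" if fields["major"][0].lower() in "aeiou" else "a"
    return PROMPT_TEMPLATE.format(article=article, **fields)


if __name__ == "__main__":
    print(build_prompt("electrical engineering", "statistics", "expected value"))
```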

Example: An electrical engineering student in a statistics course generated an expected value problem about smart home LED light show controllers analyzing music beats and triggering lighting effects. The problem covers the same statistical concepts as a generic coin-flip problem but connects to the student’s major and interests.

Implementation:

- Students generate problems using the prompt template

- You review submissions for technical accuracy and pedagogical value

- Add the best ones to course materials permanently

Benefits:

- Increased engagement without significant instructor burden (students do the customization work)

- Students practice prompt engineering skills

- Problems demonstrate relevance to specific engineering disciplines

- Creates a growing library of discipline-specific examples

This works especially well in courses like statistics, mathematics, or engineering fundamentals where the same concepts apply across disciplines but motivation suffers from generic examples.

Getting Started

Implementation doesn’t require complete curriculum overhaul. Here’s how to begin:

1. Map your course using the framework

Use the matrix (included with this guide) to categorize your assignments:

- Which assignments are conceptual vs. procedural vs. design vs. analysis?

- At what skill level are students when they encounter each assignment?

- Which assignments are truly “novice level” where students need to build foundational mental models without AI?

Understanding your current course structure is the first step toward adaptive policies.

2. Start small and focus

Don’t attempt to redesign everything at once. Choose one unit or topic where:

- Students consistently struggle

- Current AI usage seems to bypass learning

- You have clear learning objectives

Pick one of the three strategies (active learning, error detection, or customization) that fits the skill level and content type. Implement it in that one unit. Observe results over 2-3 weeks.

3. Communicate the “why” to students

Students need to understand that restrictions aren’t arbitrary. They’re protecting the struggle that builds competence.

Explain the connection between AI overuse and poor exam performance. Help them see that when they use AI to bypass foundational learning, they’re not just risking academic integrity – they’re undermining their own skill development. Frame it as: “These policies exist to help you learn, not to make your life harder.”

Make the skill progression visible. When you do allow AI use, explain why: “You’ve built the foundation, now AI can help you work more efficiently.”

4. Refine and expand systematically

After implementing in one unit:

- What worked? What didn’t?

- Where did students still struggle?

- What surprised you about their AI usage patterns?

Use what you learn to refine your approach, then expand to additional units. Build your implementation over multiple semesters rather than attempting perfection immediately.

If you have access to learning analytics (time on task, submission patterns, question attempts), use that data to identify where students get stuck and where they persist versus abandon problems. This helps you target your policies more effectively.
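As one way to act on that data, the sketch below summarizes per-question attempt records to separate questions where students persist (many attempts, eventually solved) from questions they abandon (attempted but never solved). The record format, the `summarize` and `stuck_points` functions, and the 30% abandonment threshold are all hypothetical; adapt them to whatever your platform actually exports. It uses question attempts only and ignores time on task.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class AttemptRecord:
    """One student's activity on one question (hypothetical export format)."""
    student_id: str
    question_id: str
    attempts: int
    solved: bool


@dataclass
class QuestionStats:
    students: int          # students who made at least one attempt
    mean_attempts: float   # average attempts per student who started
    abandon_rate: float    # share of those students who never solved it


def summarize(records: Iterable[AttemptRecord]) -> Dict[str, QuestionStats]:
    """Aggregate attempt records per question."""
    by_question: Dict[str, List[AttemptRecord]] = defaultdict(list)
    for record in records:
        if record.attempts > 0:  # skip students who never started the question
            by_question[record.question_id].append(record)
    stats: Dict[str, QuestionStats] = {}
    for question_id, rows in by_question.items():
        n = len(rows)
        stats[question_id] = QuestionStats(
            students=n,
            mean_attempts=sum(r.attempts for r in rows) / n,
            abandon_rate=sum(1 for r in rows if not r.solved) / n,
        )
    return stats


def stuck_points(stats: Dict[str, QuestionStats], max_abandon_rate: float = 0.3) -> List[str]:
    """Question IDs whose abandonment rate exceeds the (assumed) threshold."""
    return sorted(q for q, s in stats.items() if s.abandon_rate > max_abandon_rate)
```

Questions with a high mean attempt count but a low abandonment rate show where students persist; questions with a high abandonment rate are candidates for an in-class engagement or a revised AI policy.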

The key principle: Make one strategy work well in one context, then expand systematically. Small, sustainable changes beat ambitious failures.

Conclusion

One-size-fits-all AI policies fail because engineering education isn’t one-size-fits-all. Students at different skill levels need different kinds of support. Courses at different levels require different policies. Static approaches can’t address dynamic skill development.

We’ve integrated disruptive technologies before—calculators, computers, simulation software. We can do it with AI through thoughtful frameworks that protect foundational learning at novice levels, build critical evaluation at competent levels, and enable advanced problem-solving at proficient levels.

The goal is learning, not policing. Students need guidance on critical evaluation, not unenforceable bans. What’s at stake is preparing students for AI-saturated workplaces while ensuring they develop the engineering thinking that makes them valuable professionals rather than AI operators.

As AI capabilities continue advancing, static policies will become increasingly ineffective. Adaptive frameworks that evolve with student competence offer a sustainable path forward.

Adaptive policies take more work than blanket rules. But they’re the only approach that serves both goals: teaching students to use AI effectively while building the engineering judgment that matters.

Dr. Nikitha Sambamurthy is Editorial Director for Engineering at zyBooks, a Wiley brand. This post is based on a workshop presented on November 20, 2025.